Planning Meeting - ASR, LLM, and TTS Development

· 4 min read

The team met to discuss strategic priorities and development plans across ASR, LLM, and TTS workstreams for the coming period.

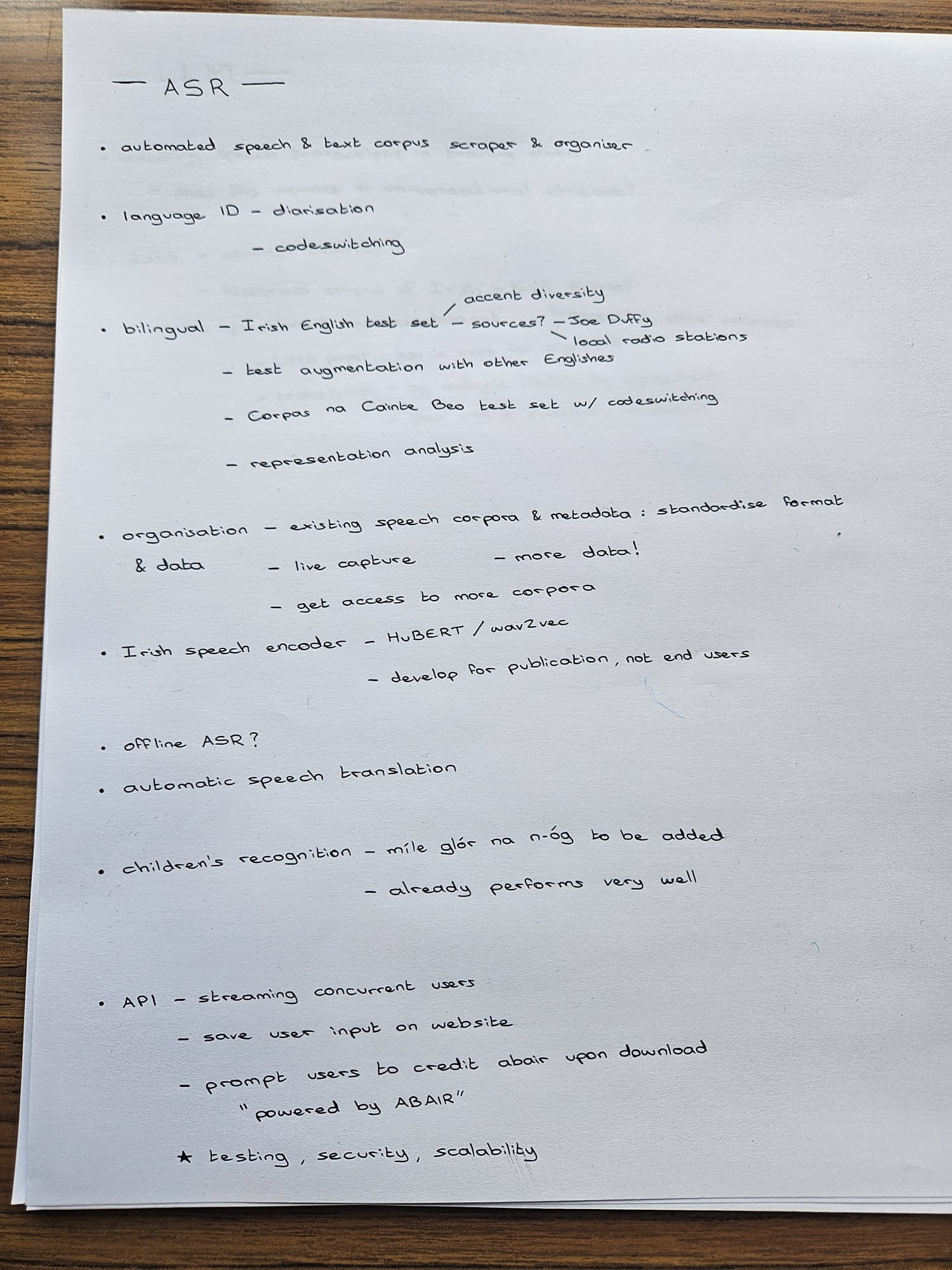

ASR Development

Core Technical Work

- Developing automated speech & text corpus scraper and organizer

- Implementing language ID with diarization and code-switching capabilities

- Building bilingual Irish-English test sets with focus on:

- Accent diversity

- Source diversity (Joe Duffy, local radio stations, Gaeilge24)

- Test augmentation with other English varieties

- Creating Corpus na Gaeilge Beo test set with code-switching support

- Conducting representation analysis

Data & Infrastructure

- Standardizing format and metadata for existing speech corpora

- Planning live capture for additional data collection

- Seeking access to more corpora

- Developing Irish speech encoder (HuBERT/wav2vec) for publication

Future Capabilities

- Offline ASR capability

- Automatic speech translation

- Children's recognition - adding mile glór na n-óg (already performs very well)

API Development

- Streaming support for concurrent users

- Saving user input on website

- Prompting users to credit ABAIR upon download

- "Powered by ABAIR" branding

- Testing, security, and scalability improvements

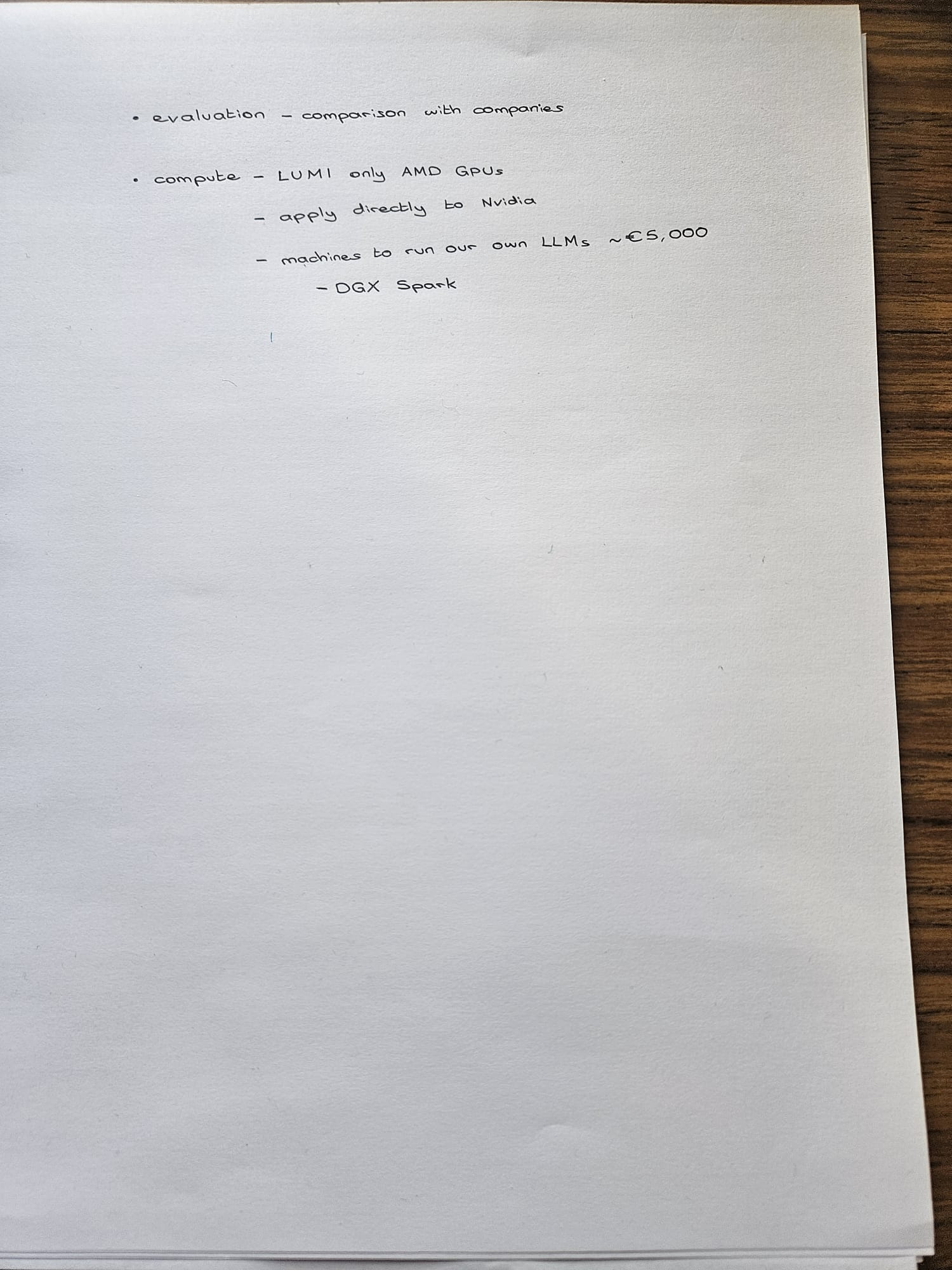

Evaluation & Resources

- Comparison with commercial solutions

- Compute resources - LUMI (AMD GPUs only)

- Direct application to Nvidia

- Exploring machines to run own LLMs (~€5,000)

- DGX Spark consideration

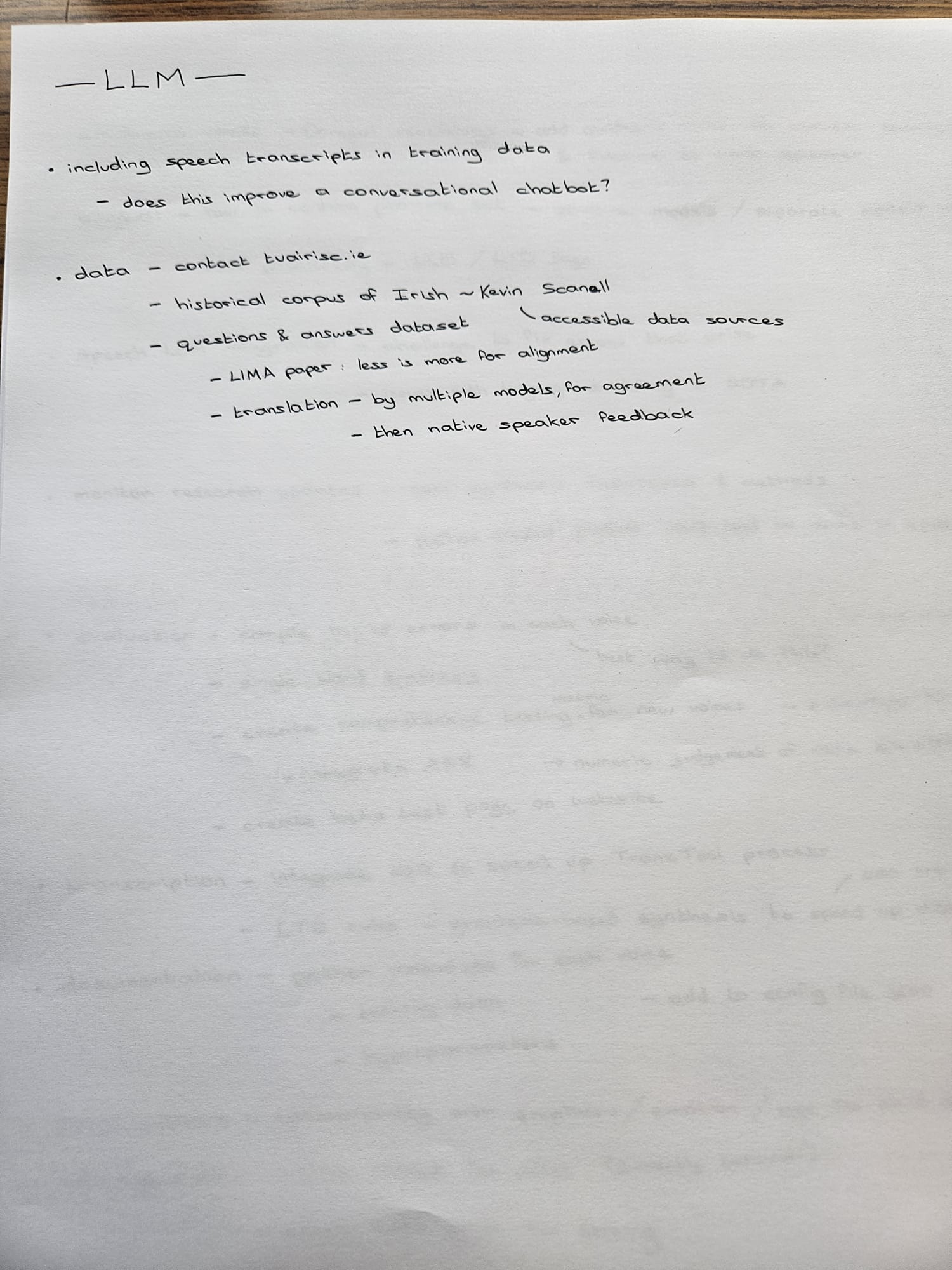

LLM Work

Training Data

- Investigating whether including speech transcripts in training data improves conversational chatbot performance

- Identifying data sources:

- Contact tuairisc.ie

- Historical corpus of Irish (Kevin Scannell)

- Other accessible data sources

- Building questions & answers dataset

Methodology

- Exploring LIMA paper approach - less is more for alignment

- Translation by multiple models for agreement, followed by native speaker feedback

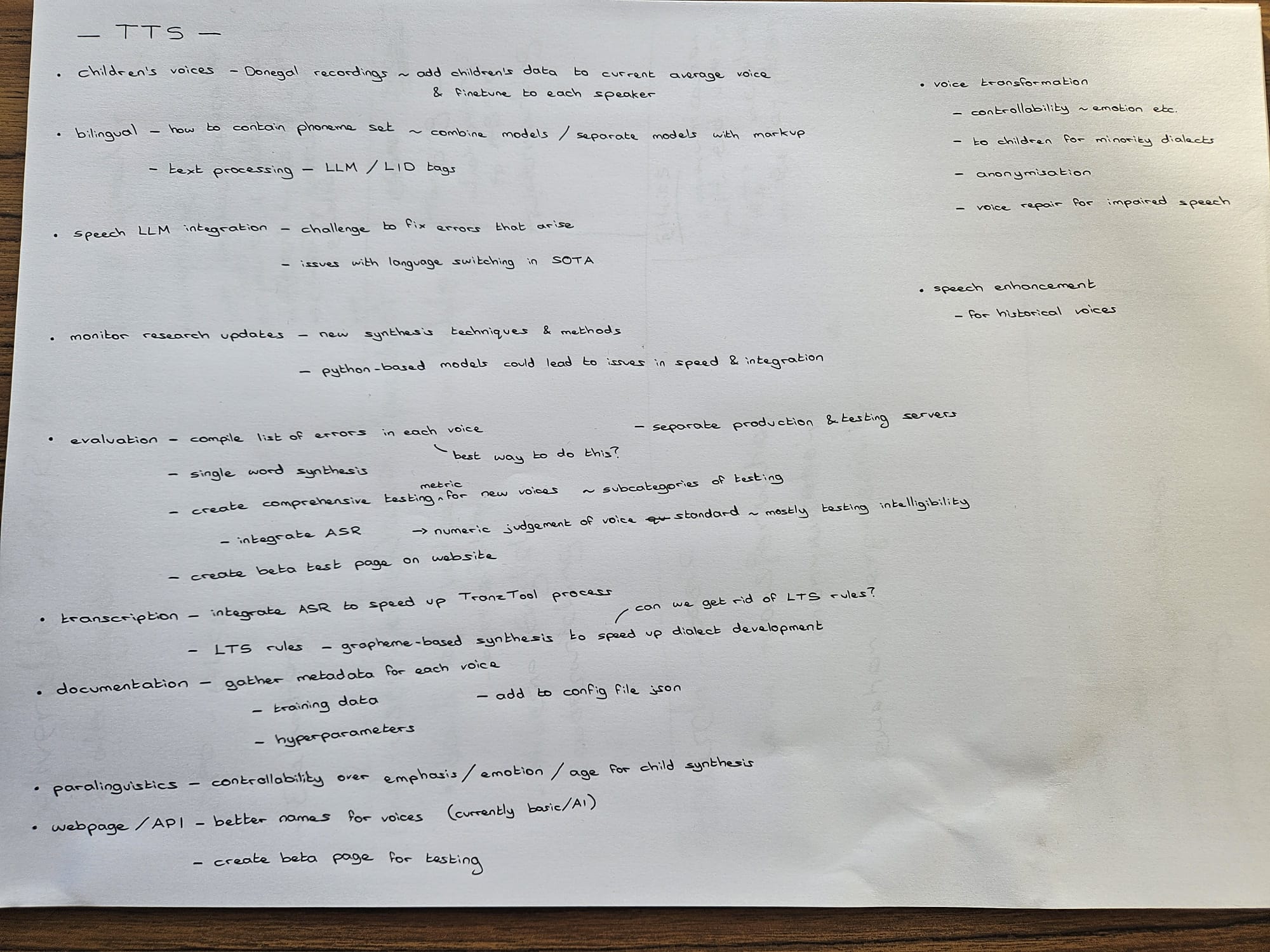

TTS Development

Children's Voices

- Processing Donegal recordings to add children's data to current average voice

- Creating mixture unique to each speaker

Bilingual Synthesis

- Determining how to contain proseme set

- Deciding between combined models vs. separate models with markup

- Text processing using LLM / LID tags

Speech LLM Integration

- Addressing challenges in fixing errors that arise

- Resolving issues with language switching in SOTA systems

Research & Monitoring

- Monitoring research updates for new synthesis techniques & methods

- Addressing potential issues with Python-based models (speed & integration)

Evaluation

- Compiling list of errors in each voice

- Single word synthesis testing

- Metric testing for new voices with subcategories

- Creating comprehensive testing standards (primarily intelligibility)

- Numeric judgment of voice quality

- Integrating ASR for evaluation

Voice Transformation

- Controllability (emotion, etc.)

- Transformation to children's voices for minority dialects

- Anonymization

- Voice repair for impaired speech

Speech Enhancement

- Enhancement for historical voices

Transcription

- Integrating ASR to speed up TransTool process

- Exploring whether LTS rules can be eliminated

- Using LTS rules with grapheme-based synthesis to speed up dialect development

Documentation

- Gathering metadata for each voice

- Adding training data to config file

- Documenting hyperparameters

Paralinguistics

- Controllability over emphasis / emotion / age for child synthesis

Webpage / API

- Better naming for voices (moving beyond basic/AI labels)

- Creating beta page for testing

Infrastructure

- Separating production and testing servers

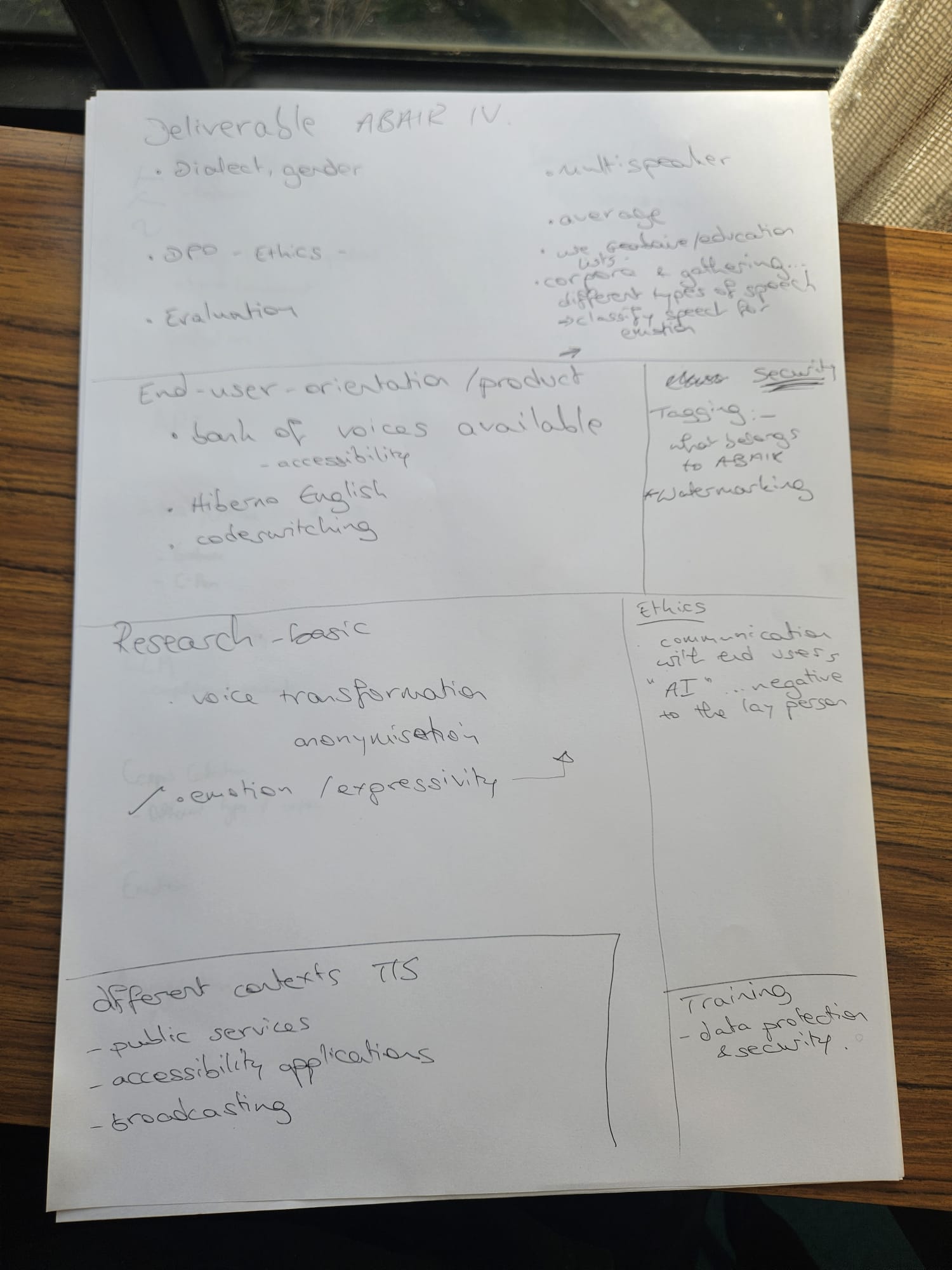

ABAIR IV Deliverables

Core Features

- Multispeaker dialect/gender support

- DPO ethics

- Comprehensive evaluation

End-User Orientation

- Bank of voices for accessibility

- Hiberno English support

- Code-switching capabilities

Research Focus

- Voice transformation

- Anonymization

- Emotion/expressivity

Security

- Misuse flagging

- Training attribution to ABAIR

- Kudos-working system

Ethics

- Communication with end users

- Addressing "AI" terminology concerns with lay persons

Application Contexts

- Public services

- Accessibility applications

- Broadcasting

Training & Data Protection

- Data protection & security protocols

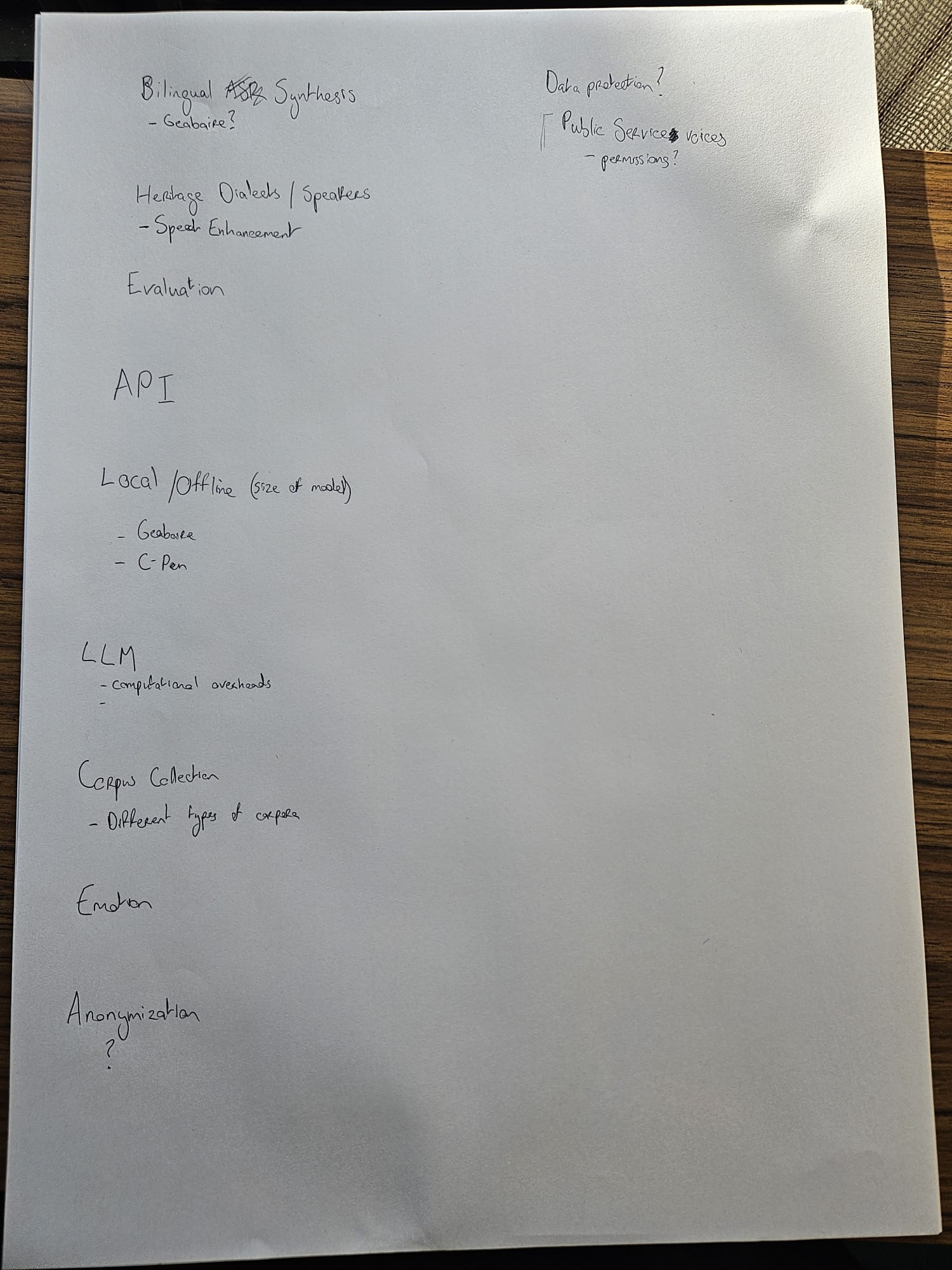

Additional Topics

- Bilingual ASR synthesis for Gaeilge

- Heritage dialects and speakers

- Speed enhancement

- Local/offline API (model size considerations)

- C-Pen integration

- LLM computational overheads

- Corpus collection strategies for different types of corpora

- Encoder development

- Anonymization techniques

- Data protection for public services/voices

- Permissions framework